LLMs and Generative AI solutions offer organizations many advantages: they help drive innovation, elevate customer experiences, and optimize operational efficiency. However, practical implementation of Generative AI comes with challenges. From ensuring data quality and responsible use to overcoming technical complexity, businesses encounter various obstacles along the way. In this article, we examine the challenges businesses may face in adopting Generative AI and present actionable recommendations for overcoming them.

Generative AI Application in Business: Key Insights

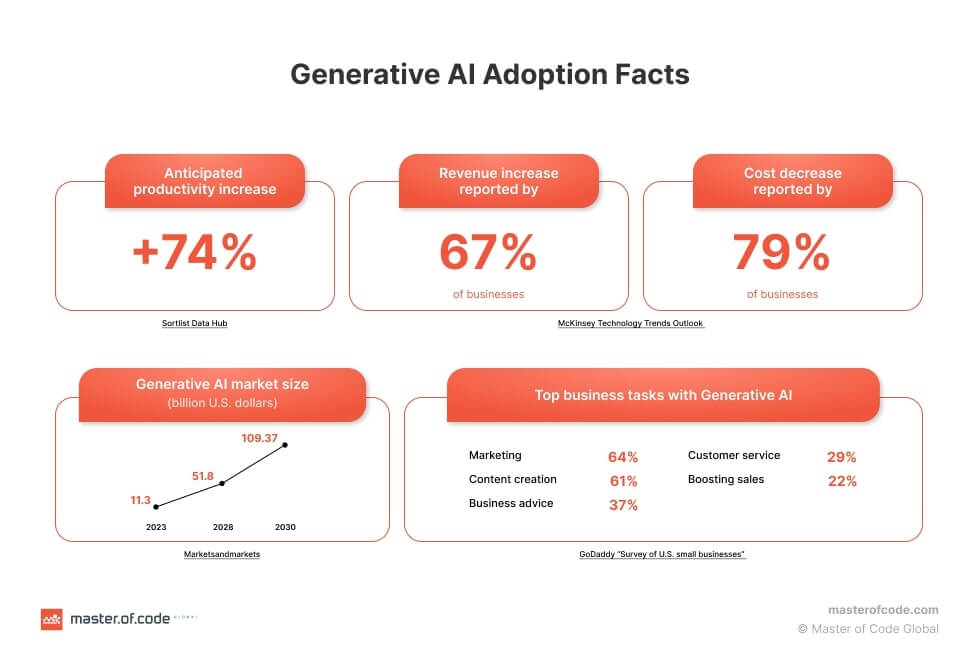

Generative AI, a powerful technology that uses machine learning to create original content, has emerged as a valuable tool for businesses across industries. Generative AI can be applied in various ways, such as automating content generation for marketing campaigns, assisting in product design and visualization, and even generating personalized recommendations for customers. Its applications span marketing, eCommerce, entertainment, and more, showcasing its potential to revolutionize business operations.

Recent research by McKinsey estimates that Generative AI could contribute $2.6 trillion to $4.4 trillion annually, and that this figure could even double once the impact of embedding Generative AI into existing systems, such as Generative AI chatbots on Conversational AI platforms, is taken into account. This could increase the overall impact of artificial intelligence by 15 to 40 percent, presenting businesses with a significant opportunity to leverage Generative AI for transformative growth. Leading players in the global Generative AI market currently include Microsoft Corporation, Hewlett Packard Enterprise Development, Oracle Corporation, NVIDIA, and Salesforce (Yahoo! Finance).

However, the adoption of Generative AI is not limited to large enterprises. According to a GoDaddy survey of 1,003 U.S. small business owners, Generative AI has captured the attention of small businesses as well. Among the respondents, 27% reported using Generative AI tools, with the most commonly used tools for business purposes being ChatGPT (70%), Bard (29%), Jasper (13%), DALL-E (13%), and Designs.ai (13%). Small business owners were also optimistic about the technology, with 57% expressing interest in using Generative AI tools for their businesses.

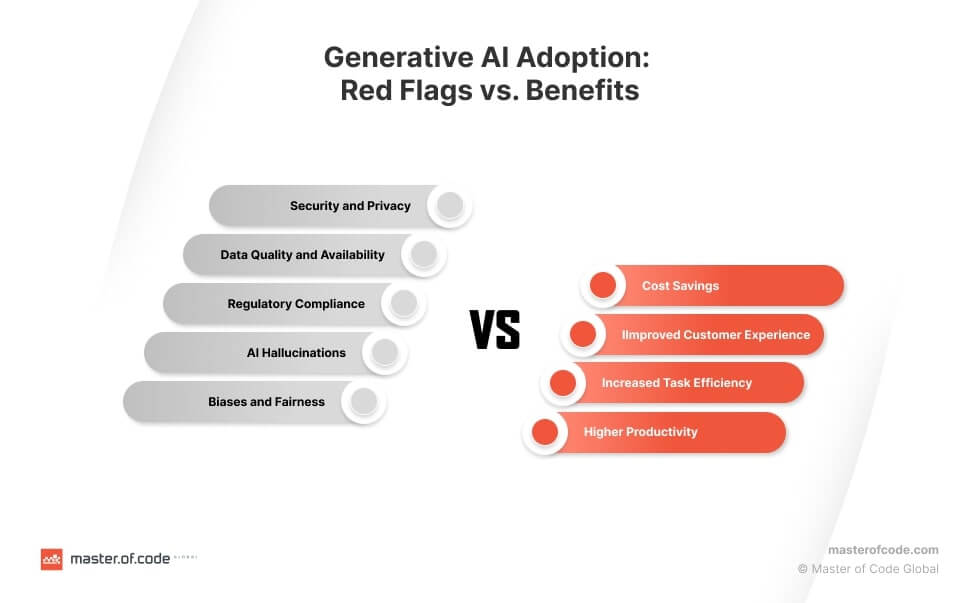

Generative AI presents significant value for businesses across industries. Its ability to automate content generation, assist in marketing, and improve customer experiences offers transformative opportunities for business operations. With the potential to contribute trillions of dollars annually and increase the overall impact of artificial intelligence, Generative AI is a technology that cannot be overlooked. However, these benefits come with a set of challenges and red flags that need to be carefully considered. In the following sections, we look at these challenges and explore the red flags associated with Generative AI adoption, particularly in the Telecom, banking, and finance industries.

Generative AI Adoption Challenges: General Overview

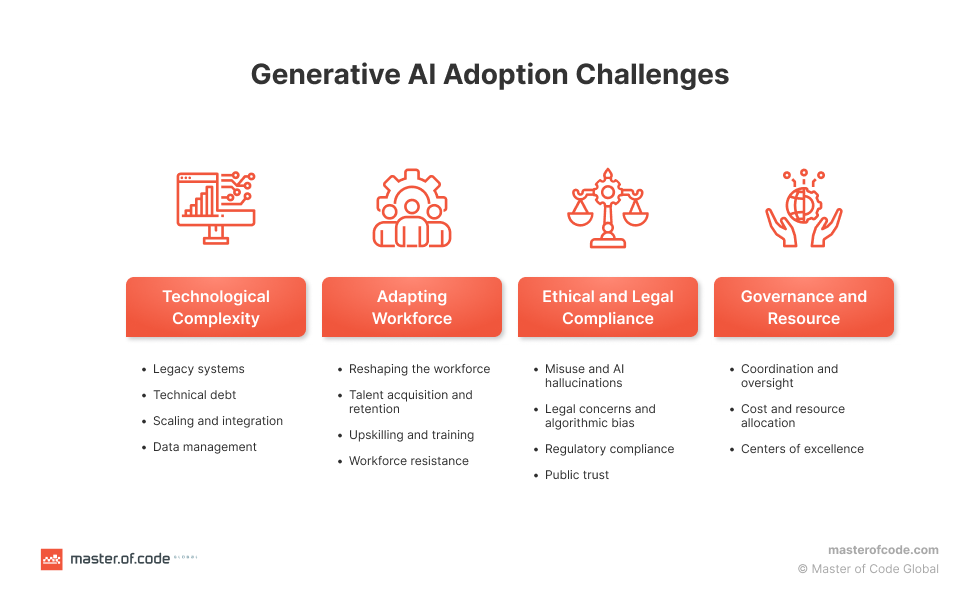

Businesses embracing Generative AI face a range of challenges during implementation, which require careful navigation. These challenges encompass technological complexity, workforce adaptation, ethical and legal compliance, and governance and resource management. Each challenge holds significant implications for successful integration and utilization of responsible Generative AI within organizations.

Key areas that require attention for successful implementation and utilization of Generative AI include:

- Technological complexity: Addressing technological complexity necessitates expertise to overcome compatibility issues, make informed integration decisions, and tackle scalability challenges. Thorough planning mitigates the accumulation of technical debt and maximizes the benefits of Generative AI.

- Workforce adaptation: Adapting the workforce involves restructuring job roles, acquiring and retaining AI expertise, and investing in upskilling initiatives. Encouraging a supportive learning environment fosters a skilled and adaptable workforce.

- Ethical and legal compliance: Ensuring ethical and legal compliance demands active monitoring and mitigation of misuse, hallucinations, intellectual property concerns, and algorithmic bias. Prioritizing regulatory compliance, data protection, and privacy safeguards promotes responsible adoption and trust-building.

- Governance and resource management: Effective governance and resource management are facilitated by robust structures such as dedicated teams or Centers of Excellence (CoEs). Efficient resource allocation for infrastructure and skilled personnel enables optimal utilization and cost efficiency, ensuring successful implementation and long-term sustainability.

By addressing these specific challenges, businesses can navigate the complexities of Generative AI implementation and leverage its full potential.

Generative AI Incorporation Red Flags in Telecom, Banking, and Finance

As businesses explore the possibilities of Generative AI adoption, it is crucial to be aware of the red flags associated with its implementation. These red flags pose risks to security, data quality, contextual understanding, interpretability, reliability, and regulatory compliance. Addressing them also requires substantial investment and technical expertise, especially in critical domains such as Telecom and banking. Understanding and mitigating these red flags is essential for businesses to use Generative AI to its full potential while safeguarding sensitive information, ensuring accurate outputs, and complying with regulatory frameworks.

Generative AI Red Flag #1: Hallucination Risk in Model Reliability

Accuracy and reliability are paramount in the financial sector, where decisions have far-reaching consequences. LLMs occasionally produce incorrect or nonsensical outputs, known as “hallucinations.” It has been estimated that the hallucination rate for ChatGPT is around 15% to 20%, a level that can seriously damage a company’s reputation. These hallucinations can mislead decision-makers and lead to erroneous actions.

Examples of Hallucination Risk in Model Reliability in Generative AI:

- If a Generative AI model mistakenly provides inaccurate interest rate calculations for mortgage loans, it could result in significant financial losses for both the bank and the customer.

- If a customer inquires about available data plans in their area, the model might provide incorrect information about the availability or pricing of certain plans. This can lead to customer dissatisfaction, misinformation, and potential loss of business for the Telecom company.

Generative AI Red Flag #2: Security and Privacy Risks

Financial institutions and Telecom companies handle highly sensitive and confidential customer data, making data privacy and security paramount. Employing Generative AI models introduces the risk of data exposure, including data breaches or unauthorized access. It has been revealed that approximately 11% of the information shared with ChatGPT by employees consists of sensitive data, including client information. The potential leakage of such data can have severe consequences, including reputational damage, financial losses, and the compromised security of customers.

Examples of Security and Privacy Risks in Generative AI:

- When utilizing Generative AI chatbots to handle customer queries, banks must ensure that customer account details, transaction data, and personal identification information remain confidential and are not inadvertently disclosed or compromised during interactions.

- If a Telecom operator deploys a Generative AI-powered recommendation system for personalized offers to customers, it must ensure that customer data, such as call records and location information, is anonymized and securely stored to maintain privacy.
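
One way to reduce the chance of sensitive details reaching a model is to mask them before the text leaves the company's systems. The sketch below illustrates the idea under stated assumptions: the `redact` function, the `PII_PATTERNS` table, and the regular expressions are hypothetical and intentionally simple. A production system would need audited, locale-aware rules and would not rely on pattern matching alone.

```python
import re

# Illustrative patterns only (assumptions, not a complete PII taxonomy).
# Order matters: card numbers are masked before the looser phone pattern runs.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched span with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    message = (
        "My card 4111 1111 1111 1111 was charged, email me at "
        "jane.doe@example.com or call +1 (555) 010-9999."
    )
    # Only the redacted text would be passed on to the chatbot or LLM.
    print(redact(message))
```

Masking at the boundary keeps the decision in one place: whatever channel the customer uses, only the redacted text is forwarded to the model.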

Generative AI Red Flag #3: Data Quality and Availability

Generative AI relies on large volumes of high-quality data to train effective models. However, the banking and Telecom industries generate vast amounts of data, and not all of it may be relevant or useful for Generative AI.

Examples of Data Quality and Availability in Generative AI:

- Banks may collect transaction data from various sources, but not all data points may be informative for training AI models, leading to potential inaccuracies or inefficiencies in the generated outputs.

- A Telecom operator may have access to call records, network performance data, and customer feedback, but only a portion of this data is suitable for training an AI model to predict network outages accurately. Insufficient or irrelevant data can result in inaccurate predictions and suboptimal performance of the AI system.

Generative AI Red Flag #4: Contextual Understanding and Residual Risks

Generative AI models employed in the banking industry encounter difficulties in fully understanding the intricate financial context, individual circumstances, and nuanced scenarios. Similarly, Generative AI models utilized in the Telecom industry struggle to capture the complexities of customer interactions and Telecom-specific situations. Furthermore, the reliability of Generative AI models heavily depends on abundant, precise, and up-to-date training data. However, when the model lacks access to recent data, there is a heightened possibility of producing inaccurate outcomes. Consequently, these factors introduce residual risks that undermine the predictive capabilities of the model. In light of these challenges, it is not surprising that around 47% of consumers express a lack of trust in Generative-AI-assisted financial planning.

Examples of Contextual Understanding and Residual Risks in Generative AI:

- If a customer seeks personalized investment recommendations from a Generative AI advisor, the model may not account for their unique financial goals, risk tolerance, or current market conditions, leading to potentially inappropriate investment suggestions.

- If a Telecom operator relies solely on Generative AI models to forecast network congestion during peak hours, there is a possibility of inaccurate predictions that could result in poor network quality, dropped calls, or slow data speeds, impacting the overall customer experience.

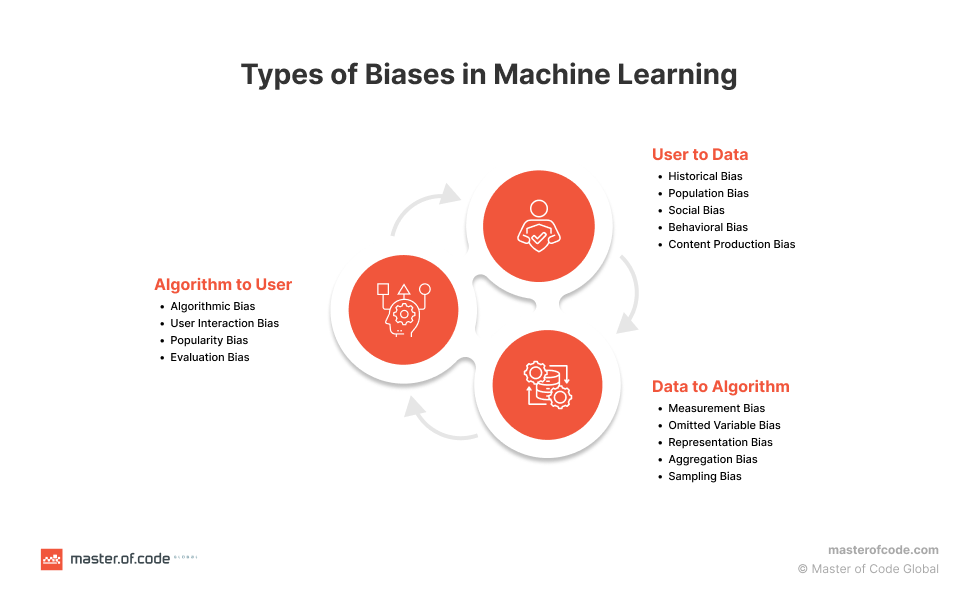

Generative AI Red Flag #5: Biases and Fairness

The financial and Telecom sectors uphold principles of fairness and equal treatment. Generative AI, driven by machine learning algorithms, relies on processing extensive amounts of visual or textual data. However, if these algorithms are trained on biased data, there is a risk that Generative AI may unintentionally perpetuate or magnify existing biases. A recent study on biases in machine learning identified various types of bias, including measurement bias, popularity bias, evaluation bias, historical bias, and social bias. Addressing and mitigating these biases, where needed with support from machine learning consulting services, is crucial to ensure fair and equitable outcomes when deploying Generative AI models.

Examples of Biases and Fairness in Generative AI:

- If a Generative AI-based loan approval system exhibits bias against certain demographic groups, it would violate regulatory guidelines and ethical standards, exposing the bank to legal and reputational risks.

- If an AI-powered marketing campaign targets specific demographics based on historical data, it may overlook underserved or marginalized communities, leading to unequal access to promotional offers and services.
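
A simple first check for the loan approval scenario above is to compare approval rates across groups. The sketch below computes a disparate impact ratio (lowest group approval rate divided by the highest). The function names and the sample data are hypothetical, and the 0.8 threshold is the widely cited "four-fifths" rule of thumb, used here as an assumption rather than as a legal test for any particular jurisdiction.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    # If nobody was approved in any group, there is no disparity to measure.
    return 1.0 if highest == 0 else min(rates.values()) / highest


if __name__ == "__main__":
    # Made-up decisions: group A approved 8 of 10, group B approved 5 of 10.
    sample = (
        [("A", True)] * 8 + [("A", False)] * 2
        + [("B", True)] * 5 + [("B", False)] * 5
    )
    ratio = disparate_impact_ratio(sample)
    flag = "review needed" if ratio < 0.8 else "within threshold"
    print(f"ratio={ratio:.3f} ({flag})")
```

A low ratio does not prove unfair treatment on its own, but it is a cheap signal for deciding which models deserve a deeper fairness review.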

Generative AI Red Flag #6: Model Risk Management and Regulatory Compliance

Model risk management and regulatory compliance are critical considerations in both the banking and Telecom industries when implementing Generative AI models. Companies in these sectors must evaluate and mitigate the risks associated with model inaccuracy, misuse, or unintended consequences while adhering to regulatory guidelines.

Generative AI Red Flag #7: High Investment Requirements and Technical Expertise

Implementing Generative AI technologies often involves significant financial investments. Companies must consider whether to build their own technology or purchase licenses for integration. Moreover, successfully implementing Generative AI requires specialized technical expertise. Companies may need to acquire the necessary skills in-house by hiring additional staff or working with third-party vendors. Acquiring and retaining talent with expertise in Generative AI can be costly and competitive, particularly as the demand for AI professionals continues to rise.

Generative AI Implementation Risks Mitigation Strategies

Mitigation Strategies for Developing Custom Generative AI

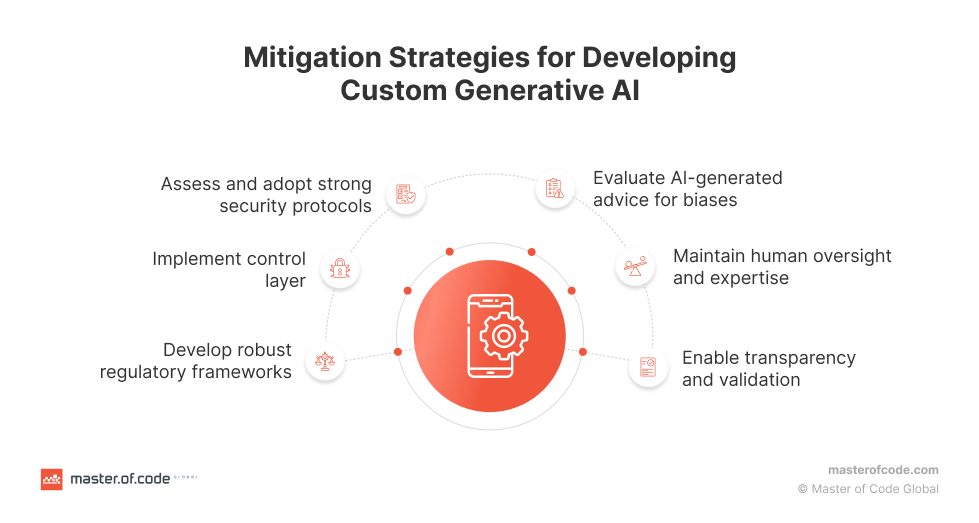

When developing custom Generative AI solutions for Telecom and banking applications, it is imperative to prioritize security, regulatory compliance, and human oversight. This section presents key strategies to address these concerns and ensure the responsible and effective implementation of Generative AI.

Risk mitigation strategies for businesses developing custom Generative AI:

- Assess and adopt strong security protocols: When developing custom Generative AI for Telecom and banking applications, it is crucial to conduct a thorough assessment of security protocols. This includes reviewing encryption methods, data storage practices, and authentication mechanisms, then implementing strong encryption, access controls, and regularly updated security measures to protect sensitive financial data.

- Implement a control layer: Add a control layer that checks and validates each answer from the LLM before it is sent to the end user. The control layer should catch hallucinations and inject relevant context during the conversation session.

- Develop robust regulatory frameworks: In the financial advice sector, collaboration between policymakers, industry stakeholders, and researchers is essential to develop comprehensive regulatory frameworks. These frameworks should address the unique challenges posed by Generative AI in financial advice, including privacy, data protection, transparency, and accountability. By establishing robust regulations, trust can be fostered, and consumer protection can be ensured.

- Evaluate AI-generated advice for biases: When utilizing AI-generated financial advice, it is important to critically evaluate it for any biases. Companies should be aware of potential biases and put in place measures to ensure fair and equitable decision-making processes. Regular evaluations should be conducted to identify and mitigate any biases that may emerge from the Generative AI system.

- Maintain human oversight and expertise: In the Telecom and banking industries, human oversight and expertise remain crucial. Companies should strike a balance between automation of business processes and human intervention to avoid costly mistakes. Human professionals should have the ability to review and validate AI-generated personalized recommendations before finalizing decisions, ensuring accuracy and minimizing risks.

- Enable transparency and validation: Organizations developing Generative AI should prioritize transparency and validation. Clear communication should be established when there is uncertainty in the AI responses, and opportunities should be provided for users to validate the generated information. This can be achieved by citing the sources used by the AI model, explaining the reasoning behind the response, highlighting any uncertainties, and establishing guidelines to prevent certain tasks from being fully automated.
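
The control layer idea from the list above can be sketched as a thin wrapper around the model call. This is a minimal illustration under stated assumptions, not a description of any specific product: `controlled_reply`, `extract_figures`, and `FALLBACK` are hypothetical names, `llm` stands for whatever function sends a prompt to the model, and the check itself (every number in the answer must also appear in the injected context) is one simple heuristic among many possible validations.

```python
import re
from typing import Callable, Set

# Hypothetical safe response used when the answer cannot be verified.
FALLBACK = "I can't confirm that right now. Let me connect you with an agent."


def extract_figures(text: str) -> Set[str]:
    """Collect numeric tokens (prices, rates, data allowances) from a string."""
    return set(re.findall(r"\d+(?:\.\d+)?", text))


def controlled_reply(question: str, context: str, llm: Callable[[str], str]) -> str:
    """Inject trusted context into the prompt, then validate the answer."""
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    answer = llm(prompt)
    # Any figure in the answer that is absent from the context is treated
    # as a possible hallucination, and the answer is withheld.
    unsupported = extract_figures(answer) - extract_figures(context)
    return FALLBACK if unsupported else answer


if __name__ == "__main__":
    plans = "Plan S: 5 GB for $10 per month. Plan M: 20 GB for $25 per month."

    def stub_llm(prompt: str) -> str:
        # Stand-in for a real model call; it invents a plan that does not exist.
        return "Plan L gives you 100 GB for $30 per month."

    print(controlled_reply("Which plans are available?", plans, stub_llm))
```

Withholding an unverified answer and handing over to a person trades some automation for reliability, which fits the human oversight recommendation in the same list.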

Mitigation Strategies for Integrating Third-Party Generative AI

As companies integrate Generative AI into their operations, ensuring trust, accuracy, and reliability becomes a top priority. The key factors that contribute to these goals are discussed below.

- Train using zero-party and first-party data: To ensure accuracy, originality, and trustworthiness of Generative AI models, companies should prioritize training them using zero-party data (data shared proactively by customers) and first-party data (data collected directly by the company). Relying on third-party data can introduce inaccuracies and make it difficult to ensure the reliability of the output.

- Choose reliable partners and vendors: When integrating Generative AI into your business operations, it is important to select trustworthy and reliable partners and vendors. Thoroughly evaluate the reputation, track record, and expertise of potential partners in the AI field. Consider factors such as their experience in developing and deploying Generative AI models, their commitment to ethical practices, and their ability to provide ongoing support and maintenance. Working with reputable Generative AI integration companies can help ensure the reliability, accuracy, and compliance of the Generative AI solutions implemented in your organization.

Looking for a reliable partner in your integration journey? Master of Code Global is a trusted expert in developing and integrating advanced Generative AI solutions for the Telecom and banking industries. Whether you need to enhance your Telecom chatbot or revolutionize your financial advisory services, our experienced team can seamlessly integrate Generative AI into your existing framework.

- Maintain fresh and well-labeled data: The quality of Generative AI models heavily depends on the data they are trained on. Companies should keep their training data fresh and ensure it is well-labeled. Outdated, incomplete, or inaccurate data can lead to erroneous or unreliable results, including the propagation of false information. It is crucial to review datasets and documents used for training, refine them to remove bias, toxicity, and falsehoods, and prioritize principles of safety and accuracy.

- Incorporate human oversight: While Generative AI tools can automate certain processes, it is essential to have a human in the loop. These tools may lack understanding of emotional or business context and may not recognize when they produce incorrect or harmful outputs. Humans should be involved in reviewing the generated outputs, ensuring accuracy, identifying bias, and verifying that the models are functioning as intended. Generative AI should be seen as a complement to human capabilities, augmenting and empowering them rather than replacing or displacing them.

- Test rigorously and continuously: Generative AI cannot be set up and left unattended. Continuous oversight and testing are necessary to identify and mitigate risks. Companies can automate the review process by collecting metadata on AI systems and developing standard mitigations for specific risks. However, human involvement is crucial in checking the output for accuracy, bias, and hallucinations. Consider investing in ethical AI training for engineers and managers to equip them with the skills needed to assess AI tools. If resources are limited, prioritize testing models that have the potential to cause the most harm.

- Seek feedback and engage stakeholders: Actively seek feedback from users, customers, and other stakeholders who interact with Generative AI tools. Encourage open communication channels to understand their experiences, concerns, and suggestions. Feedback can help identify areas for improvement, address potential issues, and build trust with the user base.

Wrapping up

The adoption of Generative AI in business holds immense potential for driving innovation, enhancing productivity, and gaining a competitive edge. However, businesses must navigate various challenges related to workforce adaptation, technological complexity, governance, and resource management. In the Telecom, banking, and finance industries, specific red flags such as security vulnerabilities, biases, and regulatory compliance must be carefully addressed.

In response to the challenges faced by businesses adopting Generative AI and the overall rise in its popularity, Master of Code has developed a solution called “LOFT” (LLM Orchestration Framework Toolkit). This resource-saving approach, based on LLMs (such as GPT-3.5), enables the integration of Generative AI capabilities into existing chatbot projects without the need for extensive modifications.

Master of Code’s middleware integrates with the client’s NLU provider and the model, making it possible to:

- Control the flow and the answers coming from the LLM

- Dynamically inject context from external APIs

- Add rich (structured) content alongside text, tailored to the conversational channel

With the help of Generative AI development solutions, businesses can address red flags in adoption while mitigating risks and maximizing the benefits of Generative AI technology.