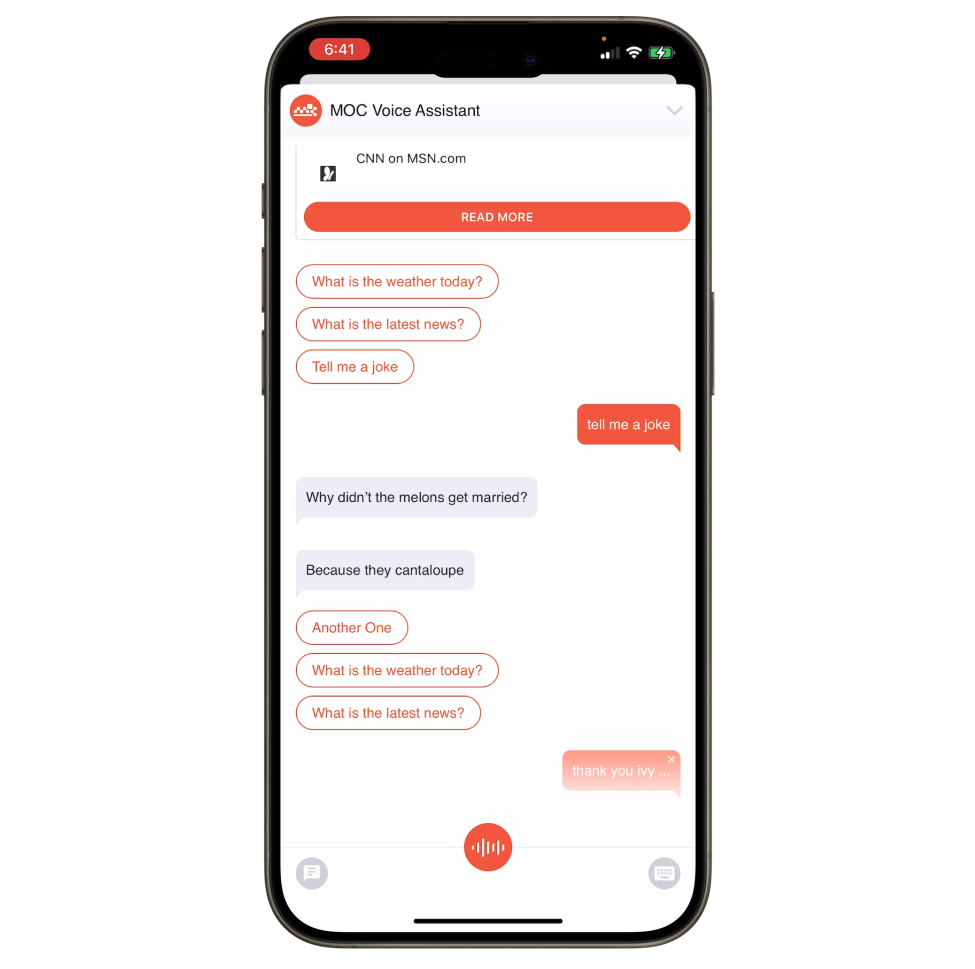

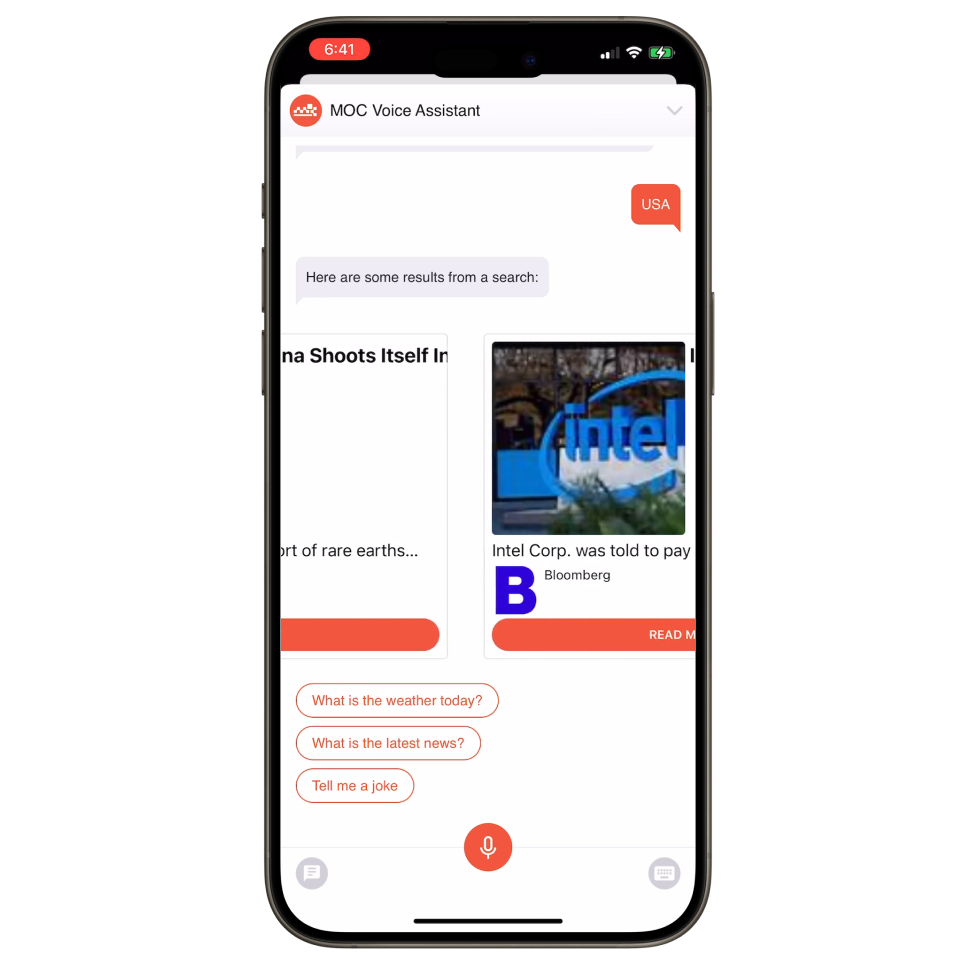

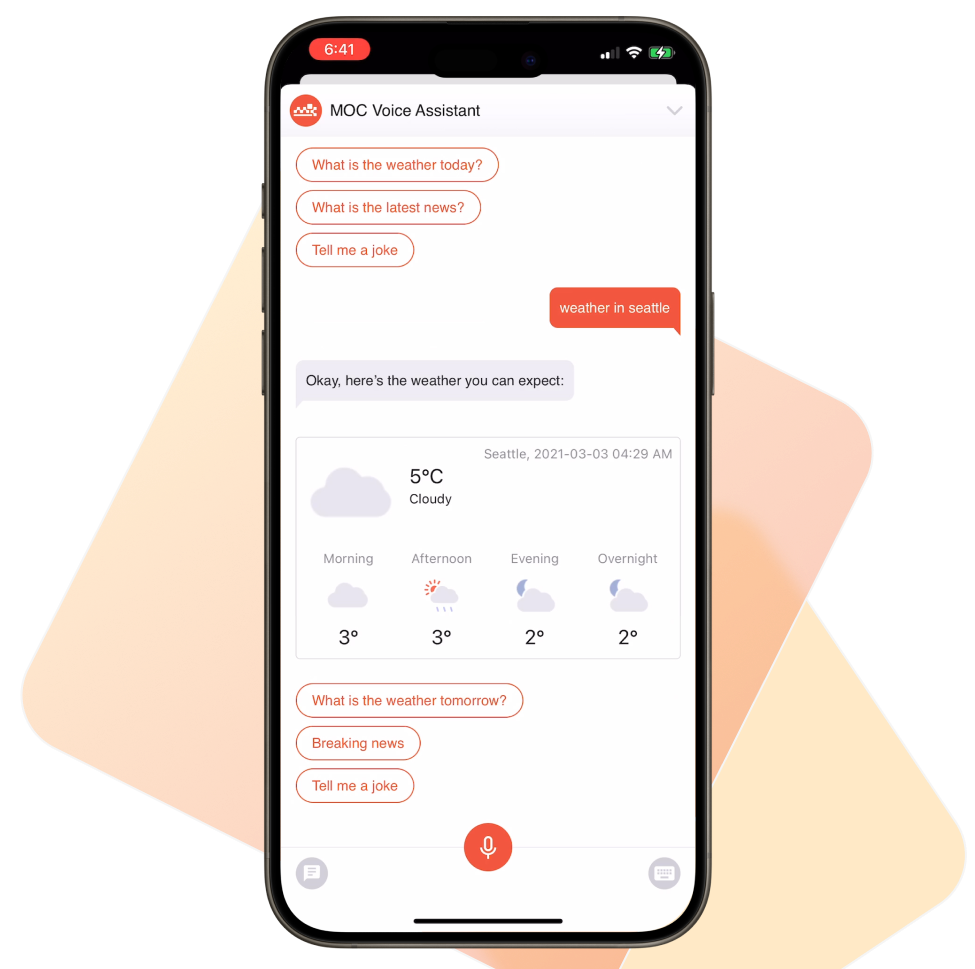

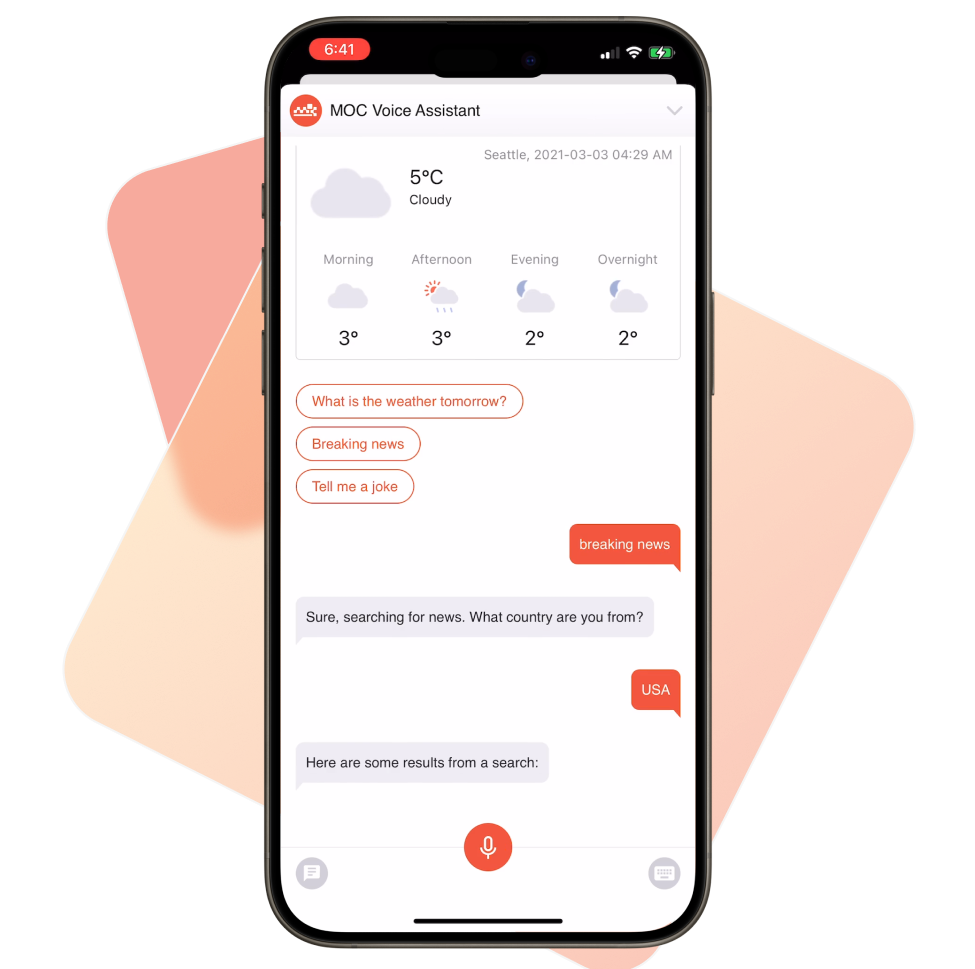

Voice is the next big thing. Adding it to digital platforms is now a must for businesses wanting to create easy-to-use experiences. Our work on creating an embeddable voice assistant explored the challenges of human-machine interaction. After focused research and development, we’ve built a solution that works across platforms, providing high-quality, hands-free services.

The digital landscape has been dominated by text-based interactions for a long time, with chatbots becoming key players. However, the potential of voice as a main interface remained largely unexplored. While traditional voice assistants like Siri offered revolutionary experiences, their isolation within native app ecosystems limited their reach. Master Of Code Global identified a significant gap in the market: a need for a versatile tool that could be seamlessly integrated into any application.

Our vision was to create a framework that would enable our developers to bring the power of voice to companies worldwide. By doing so, we aimed to change the way people interact with technology, transforming text-based conversations into intuitive and engaging speech interactions. This would not only enhance user enjoyment but also open up new possibilities for businesses to connect with their customers on a deeper level.

Developing a speech recognition system was like charting unfamiliar waters. Unlike the relatively saturated native app ecosystem, the landscape for embeddable voice assistants was unknown territory. This presented a major challenge: how to integrate a voice-driven interface into existing applications without compromising client satisfaction.

The technical issues were also tough at first. Achieving a native-feeling, lag-free interaction required precise engineering to optimize speech recognition, natural language processing, and voice synthesis.

Choosing the right UX design was just as essential. How would people activate the bot? What would be the most comfortable way for them to listen to its responses? These were the questions we dealt with during the design phase. To address them, we conducted extensive research and testing, iterating on our design based on customer feedback. The result was a comprehensive demo of the assistant in action and a framework that we can reuse on other projects.
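To make the three stages named above more concrete, here is a minimal TypeScript sketch of a single conversational turn: audio goes through speech recognition, the transcript goes through a dialog step, and the reply is synthesized back to audio, with the time spent in each stage recorded so lag can be tracked. This is not the framework's actual API. The names `SpeechRecognizer`, `DialogEngine`, `SpeechSynthesizer`, and `runTurn` are assumptions made for illustration, and the stub stages stand in for real speech services.

```typescript
// Hypothetical stage interfaces; none of these names come from the actual framework.
interface SpeechRecognizer {
  transcribe(audio: Uint8Array): Promise<string>;
}
interface DialogEngine {
  reply(utterance: string): Promise<string>;
}
interface SpeechSynthesizer {
  synthesize(text: string): Promise<Uint8Array>;
}

interface TurnResult {
  transcript: string;
  replyText: string;
  replyAudio: Uint8Array;
  // Wall-clock time spent in each stage, in milliseconds.
  timingsMs: { recognition: number; dialog: number; synthesis: number };
}

// Runs one turn: speech recognition -> dialog -> voice synthesis,
// measuring each stage so slow steps are easy to spot.
async function runTurn(
  audio: Uint8Array,
  recognizer: SpeechRecognizer,
  dialog: DialogEngine,
  synthesizer: SpeechSynthesizer
): Promise<TurnResult> {
  const t0 = Date.now();
  const transcript = await recognizer.transcribe(audio);
  const t1 = Date.now();
  const replyText = await dialog.reply(transcript);
  const t2 = Date.now();
  const replyAudio = await synthesizer.synthesize(replyText);
  const t3 = Date.now();
  return {
    transcript,
    replyText,
    replyAudio,
    timingsMs: { recognition: t1 - t0, dialog: t2 - t1, synthesis: t3 - t2 },
  };
}

// Stub stages (assumptions): they treat each audio byte as a character code,
// so the sketch runs without any real speech service.
const stubRecognizer: SpeechRecognizer = {
  transcribe: async (audio) =>
    Array.from(audio, (b) => String.fromCharCode(b)).join(""),
};
const stubDialog: DialogEngine = {
  reply: async (utterance) => `You said: ${utterance}`,
};
const stubSynthesizer: SpeechSynthesizer = {
  synthesize: async (text) => Uint8Array.from(text, (c) => c.charCodeAt(0)),
};

// Usage: the bytes 104 and 105 decode to "hi" in the stub recognizer.
runTurn(new Uint8Array([104, 105]), stubRecognizer, stubDialog, stubSynthesizer)
  .then((turn) => console.log(turn.replyText)); // prints "You said: hi"
```

Keeping each stage behind its own interface is one way to swap a recognition or synthesis provider without touching the rest of the turn logic.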

We can quickly show customers a demo version of their future tool. The framework can also be reused on clients’ voice-based projects to speed up development.

The library was written as a native component, in Swift for iOS and in Kotlin for Android. This makes our solution easy to embed in native apps as well as in cross-platform frameworks like React Native and Flutter.

Our framework is adaptable to a wide range of industries. From enhancing eCommerce experiences with voice-guided shopping to revolutionizing healthcare with patient-centric interactions, the end solution will deliver value across the board.

MOCG helps teams with an existing text-based bot quickly visualize and implement a voice interface. This way, we can experiment with different hands-free interactions and gather valuable feedback.

It’s possible to flawlessly integrate voice technology directly into your native application. This assistant seamlessly blends with your platform’s design and functionality, providing a truly native feel.

Our team can tailor your future conversational agent to align with your brand identity and audience preferences. The framework is scalable and flexible, giving you full control over personality, speech speed, tone, and voice options.
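As a rough illustration of what such tailoring could look like in code, the TypeScript sketch below models the four options mentioned above as a single profile with defaults and per-brand overrides. The field names, the allowed tone values, the default voice identifier, and the 0.5 to 2.0 speech-rate range are all assumptions chosen for the example, not the framework's real configuration.

```typescript
// Hypothetical configuration shape; field names and ranges are illustrative assumptions.
type Tone = "neutral" | "friendly" | "formal";

interface VoiceProfile {
  personality: string; // short persona description for the dialog layer
  speechRate: number;  // 1.0 = normal speaking speed
  tone: Tone;
  voiceId: string;     // identifier of the synthesized voice (made-up value below)
}

const defaultProfile: VoiceProfile = {
  personality: "helpful assistant",
  speechRate: 1.0,
  tone: "neutral",
  voiceId: "default-voice",
};

// Merges brand-specific overrides onto the defaults and clamps the
// speech rate to an assumed safe range of 0.5x to 2.0x.
function buildProfile(overrides: Partial<VoiceProfile>): VoiceProfile {
  const merged: VoiceProfile = { ...defaultProfile, ...overrides };
  merged.speechRate = Math.min(2.0, Math.max(0.5, merged.speechRate));
  return merged;
}

// Usage: a formal brand voice that speaks slightly slower than normal.
const brandProfile = buildProfile({ tone: "formal", speechRate: 0.9 });
console.log(brandProfile.tone, brandProfile.speechRate);
```

Holding these settings in one object keeps brand-specific choices separate from the voice logic itself, so a different client profile would not require code changes.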

Node.js

Swift

SwiftUI

Azure

Kotlin

Android