The arrival of GPT-3 models has brought significant changes to bot building. Even skeptical experts, once they became familiar with the capabilities of Large Language Models (LLMs), realized that a new era in Conversational AI development and bot building is beginning. Things will never be the same.

So, what exactly has changed?

First, let’s recall the principles of building chatbots with “classical” natural language processing and understanding (NLU) models:

- Dialogue designers shape a set of user intents that the system must detect.

- Once triggered, an intent initiates a specific bot action in the dialogue, such as a predefined response, an API request to an integrated system, payment processing, and so on.

- Each intent has a clear description, for example, “I want to buy something.” To recognize it, bot trainers train the system’s NLU model on a set of similar expressions that convey this intent, so that the model forms a stable pattern.

- Any dialogue has a finite number of intents, and users cannot get an answer to a question that the system does not recognize.

Technically, the “classical” model has the following advantage: if the intent is successfully recognized, the user receives a clearly predefined response or a certain predefined action is initiated.

However, the binary nature of a system of rigidly defined intents has downsides: the system does not recognize information that the intents in the dialogue thread did not explicitly anticipate, and predicting scenarios for every possible variant of a user intent is a complex and demanding task for chatbot developers.
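To make this trade-off concrete, here is a minimal sketch of an intent-based bot in Python. The intent names, example phrases, and the word-overlap matcher are illustrative assumptions that stand in for a trained NLU model; they do not describe any particular platform.

```python
import re
from typing import Optional

# Hypothetical intents: each has example phrases and a predefined response.
INTENTS = {
    "buy_product": {
        "examples": ["i want to buy something", "i would like to purchase an item"],
        "response": "Sure! What would you like to buy?",
    },
    "check_order": {
        "examples": ["where is my order", "track my order status"],
        "response": "Please share your order number.",
    },
}

FALLBACK = "Sorry, I didn't understand that."


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def detect_intent(user_text: str, threshold: float = 0.5) -> Optional[str]:
    """Return the best-matching intent name, or None if nothing is close enough.

    Word overlap (Jaccard similarity) is a toy stand-in for a trained NLU model.
    """
    words = tokenize(user_text)
    best_name, best_score = None, 0.0
    for name, intent in INTENTS.items():
        for example in intent["examples"]:
            example_words = tokenize(example)
            union = words | example_words
            score = len(words & example_words) / len(union) if union else 0.0
            if score > best_score:
                best_name, best_score = name, score
    return best_name if best_score >= threshold else None


def reply(user_text: str) -> str:
    """Recognized intents get their predefined response; everything else falls back."""
    intent = detect_intent(user_text)
    return INTENTS[intent]["response"] if intent else FALLBACK
```

A recognized phrase such as "I want to buy something" always yields the same predefined response, while anything outside the two intents ends in the fallback message, which is exactly the limitation described above.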


Benefits of GPT-3 models over traditional NLU systems for conversational solutions

GPT-3 is a Large Language Model (LLM) trained on many dialogues and reference materials from a very wide range of domains of human knowledge. Thanks to the way GPT-3 models generate text, bots built on them gain high-quality natural language processing.

A bot that uses GPT-3 models can generate high-quality responses based on a given context and respond to user input in a more natural, human-like way than traditional models. While GPT-3 is not perfect, it is a significant step forward in Conversational AI development and represents a new stage in this field. Sometimes the accuracy and quality of the answers are so impressive that a user cannot tell the model’s response from that of a human agent.

Instead of matching a clearly defined intent, the model evaluates user input and builds a response probabilistically, drawing on a vast amount of information. Therefore, the model can respond and maintain a dialogue on virtually any topic, as well as recognize virtually any user intent.

That said, the current GPT-3.5 Turbo model is an updated, more advanced, high-speed version of the model used in the well-known ChatGPT project. Its creator presents this model as the most sophisticated on the market, capable of producing any type of chat discussion. According to the developers, this model addresses the weaknesses and bottlenecks of the previous version, and it is trained on a massive quantity of data from numerous sources and a large volume of human interactions.

These capabilities are a major advantage of the GPT-3 model family over the “classical” ones, but they are also a drawback: without dedicated controls from developers, the model may generate false information or engage in unnecessary or even harmful dialogues with unethical users.

Where can these capabilities be used, and can side effects be avoided?

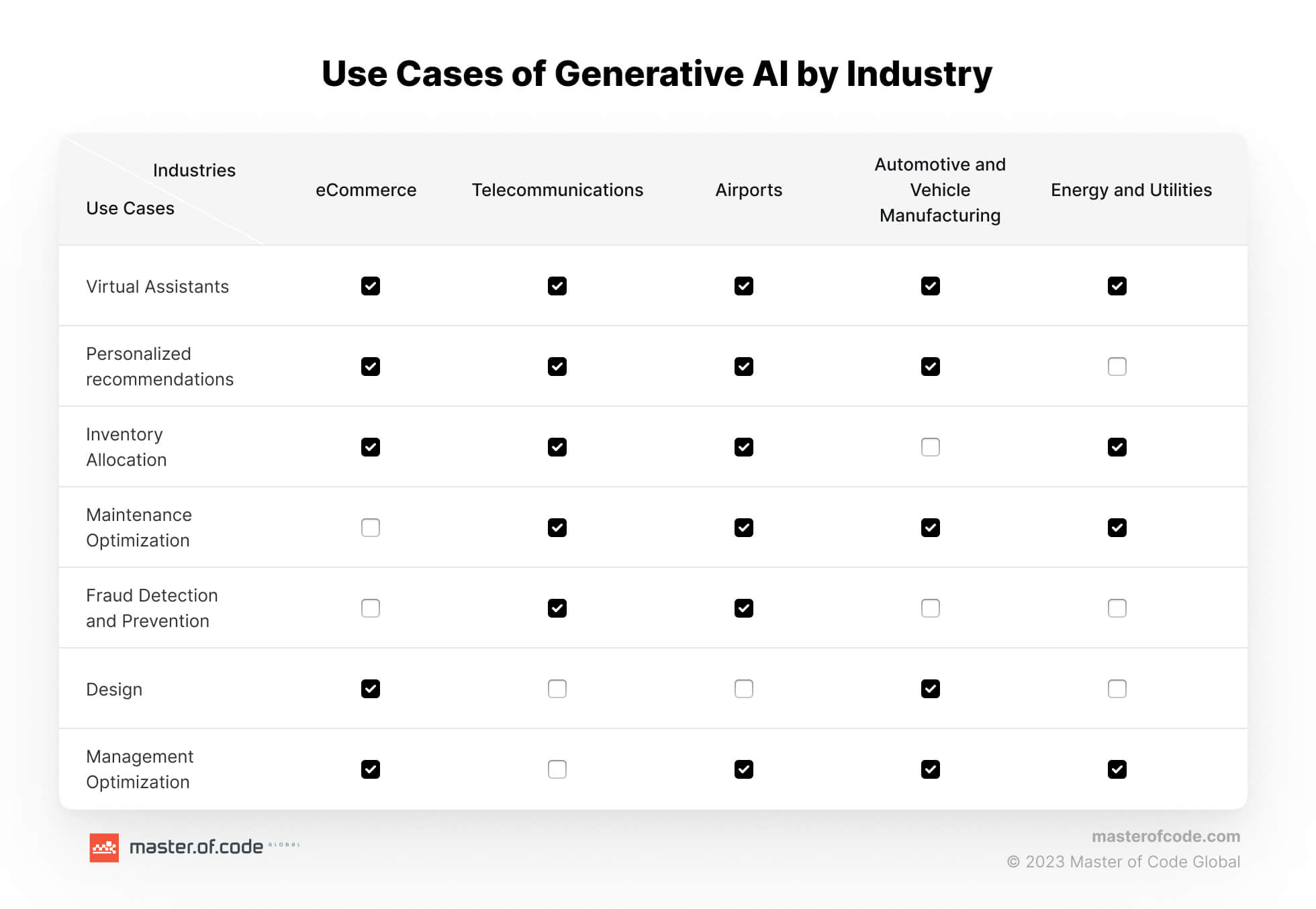

Use Cases of GPT-3 capabilities for business

Business use cases vary widely, since generally anything currently performed by call center agents can be handled by a well-managed and controlled GPT-3.5 Turbo model. Side effects can be mitigated thanks to ChatML, the new structured dialogue language presented by the developer. It lets users control the direction, tone, accuracy, and other parameters of the model’s responses through combinations of instructions passed to the model with each request for response generation. This is accomplished by combining the capabilities of traditional NLU systems for user intent recognition with the capabilities of the new LLMs.
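As a rough illustration of passing instructions with each request, the sketch below assembles a chat-style request body in Python. The role/content message layout and the `model`, `messages`, and `temperature` fields mirror the publicly documented chat format for `gpt-3.5-turbo`. The rule texts, the `build_request` function name, and the temperature value are assumptions made for this example, and nothing is actually sent to a model.

```python
import json

# Hypothetical business instructions; real ones would come from the client.
BUSINESS_RULES = [
    "You are a support assistant for an online store.",
    "Answer politely and concisely.",
    "If you are not sure about a fact, say so instead of guessing.",
    "Decline requests unrelated to the store.",
]


def build_request(user_text, history=None, rules=BUSINESS_RULES):
    """Assemble a request body that repeats the instructions on every call."""
    messages = [{"role": "system", "content": " ".join(rules)}]
    messages.extend(history or [])  # earlier turns of the dialogue, if any
    messages.append({"role": "user", "content": user_text})
    return {
        "model": "gpt-3.5-turbo",
        "temperature": 0.2,  # assumed value: lower means less random output
        "messages": messages,
    }


if __name__ == "__main__":
    # Print the payload instead of sending it anywhere.
    print(json.dumps(build_request("Where is my order?"), indent=2))
```

Because the instructions travel with every request, tone and scope can be changed by editing the rule list, without retraining anything.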

Understanding the capabilities offered by this latest technology, the Master of Code (MOC) team has actively engaged in research and development of pilot projects using it and has developed its own proposal.

Business adoption of the Large Language Model

While some companies are still working with “classical” natural language processing models for customer support and other use cases, other ambitious projects have already started to use the Generative AI capabilities of Large Language Models. Master of Code, as an experienced Conversational AI company, has weighed the advantages and disadvantages of the new technology and offers businesses an original model for implementing Generative AI capabilities that complement the client’s existing NLU system. Check out the extended Conversational AI vs. Generative AI comparison.

The problem for businesses behind Large Language Model adoption

Legacy system modernization. If a business has already implemented a conversational solution, changing the platform itself consumes a large share of the company’s IT budget and requires workforce involvement. According to Fintech Futures, 36% of banks name complex systems as a top challenge faced by their organizations. In most industries (Banking and Financial Institutions, Telecommunications, Retail networks), the number of clients involved and the investment needed to develop and train a new platform can block system modernization.


The solution: “Embedded Generative AI”

Understanding these bottlenecks and blockers for businesses, Master of Code is working on a resource-saving approach to Large Language Model enablement called “Embedded Generative AI”, based on the latest GPT-3.5 Turbo model. We embed our own middleware data exchange system between the client’s NLU provider and the model (referred to in this text as the NLG system). This middleware also controls the model’s parameters. Importantly, the middleware does not depend on the type of NLU system used by the client’s provider.

On top of that, Master of Code offers to improve the existing dialogue flow of the client’s chatbot. This is made possible by setting up special entry and exit points for the NLG generative flow, together with special intents that interact with the responses generated by the NLG system and then initiate additional actions as specified by the current conversation scheme. These intents also control what information the NLG system receives and generates, based on business rules.
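The routing idea can be sketched in Python as follows. This is only an illustration under stated assumptions, not Master of Code’s actual middleware: the names `handle_turn`, `exit_intent`, `stub_nlu`, and `stub_nlg`, the confidence threshold, and the blocked-terms rule are all hypothetical, and the NLU and NLG systems are replaced by stub functions so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class NluResult:
    intent: Optional[str]  # None when the NLU system recognizes nothing
    confidence: float


# Hypothetical predefined responses owned by the existing dialogue flow.
CLASSIC_RESPONSES = {"check_order": "Please share your order number."}
# Hypothetical business rule: phrases generated text must not contain.
BLOCKED_TERMS = ("medical advice", "legal advice")
SAFE_REPLY = "Let me connect you with a human agent."


def exit_intent(generated: str) -> Optional[str]:
    """Exit point: map a generated reply back to an action in the existing flow."""
    return "handover_to_agent" if "human agent" in generated.lower() else None


def handle_turn(user_text: str,
                nlu: Callable[[str], NluResult],
                nlg: Callable[[str], str],
                threshold: float = 0.7) -> dict:
    """Route one user turn through the existing flow or the generative flow."""
    result = nlu(user_text)
    # Recognized intent: the primary conversation flow stays untouched.
    if result.intent in CLASSIC_RESPONSES and result.confidence >= threshold:
        return {"source": "nlu", "text": CLASSIC_RESPONSES[result.intent], "action": None}
    # Entry point: nothing was recognized, so the turn goes to the NLG system.
    generated = nlg(user_text)
    # Business rules decide what is allowed to leave the NLG system.
    if any(term in generated.lower() for term in BLOCKED_TERMS):
        generated = SAFE_REPLY
    return {"source": "nlg", "text": generated, "action": exit_intent(generated)}


def stub_nlu(text: str) -> NluResult:
    """Stand-in for the client's NLU provider."""
    if "order" in text.lower():
        return NluResult("check_order", 0.9)
    return NluResult(None, 0.0)


def stub_nlg(text: str) -> str:
    """Stand-in for the generative model."""
    if "lawyer" in text.lower():
        return "Here is some legal advice for your case."
    return "Our store ships worldwide within five days."
```

With these stubs, a question about an order is answered by the existing flow, an unrecognized question is answered by the generative flow, and a generated reply that breaks the business rule is replaced with a safe message that also triggers the hypothetical hand-over action.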

“Embedded Generative AI” is an integration methodology, developed by Master of Code specialists, to build Generative AI features into a customer’s existing Conversational AI platform.

Advantages of the “Embedded Generative AI” model over Large Language Model adoption

- Preservation of the existing investment in conversational solutions, since replacement with another chatbot platform is not required. Due to the specific architecture of “Embedded Generative AI”, the client’s existing Conversational AI platform continues to function with no modifications to the primary conversation flow. Moreover, the NLU provider does not require any configuration changes.

- Maintained compatibility with third-party provider integrations: there is no need to break existing integrations between conversational solutions and providers and develop new ones, as the main NLU platform remains unmodified.

- Enhancement of the existing dialogue scheme: the work done by dialogue designers is preserved, while NLG capabilities add new options for tuning the bot’s persona, adding voice technologies, and other nuances.

- Customized approach to embedding the GPT-3 model: MOC provides expertise in adjusting the NLG solution and model to the client’s unique business domain, following the client’s business instructions for bot behavior, as well as model validation and continuous quality control.

- Maintenance of the “Embedded Generative AI” model, including expert support, service, and tweaking of the model during its lifetime. Each model is updated on a regular basis, which includes analyzing processed dialogues, response quality, model testing, metric analysis, and adding new business instructions or extra information to the model.

- Smooth release of the “Embedded Generative AI” model. The proposed “cut-in” approach of adding an extra dialogue stream for NLG is effective because the primary dialogue stream remains unmodified until the very last minute of the transfer to production, which follows thorough testing of the new system. Furthermore, if required, the entire platform can be restored to its prior condition, using the “traditional” conversational solution.
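One way to picture the “cut-in” release and rollback is a switch around the extra generative stream, sketched below in Python. The `make_bot` wrapper, the `generative_enabled` flag, and the stub reply functions are hypothetical names chosen for this illustration; an actual rollout could be organized quite differently.

```python
from typing import Callable

FALLBACK = "Sorry, I didn't understand that."


def classic_reply(user_text: str) -> str:
    """Stand-in for the existing, unmodified conversational solution."""
    if "order" in user_text.lower():
        return "Please share your order number."
    return FALLBACK


def generative_reply(user_text: str) -> str:
    """Stand-in for the extra NLG dialogue stream."""
    return "Generated answer to: " + user_text


def make_bot(generative_enabled: bool = False) -> Callable[[str], str]:
    """Wrap the existing bot; the generative stream is used only when enabled."""

    def reply(user_text: str) -> str:
        answer = classic_reply(user_text)  # the primary stream always runs first
        if generative_enabled and answer == FALLBACK:
            return generative_reply(user_text)  # extra stream for unrecognized input
        return answer

    return reply


before_release = make_bot(generative_enabled=False)
after_release = make_bot(generative_enabled=True)
```

In this sketch, recognized requests get identical answers before and after the release, and switching the flag off again restores the prior behavior in full.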

The brand-new “Embedded Generative AI” model, developed by Master of Code Global, allows virtually any existing bot project to be upgraded to a Generative AI chatbot powered by Natural Language Generation technology, whether it handles customer support, retail sales, information services, consulting, or system query processing. Most significantly, we recognize the value of efficiency in business, which means delivering maximum outcomes at the lowest possible cost.

Explore the advantages of Generative AI in your company. A proof of concept (POC) will be done in 2 weeks to validate your business idea!