Ethics in Conversation Design refers to the consideration of moral principles when designing and developing AI experiences. The topic is rarely discussed as part of the design process, perhaps because it’s difficult to talk about or simply easy to ignore. Conversational technologies can be influenced by the biases of their creators, and it’s important to account for this to ensure our designs are inclusive and transparent.

Technology made by humans is subject to the same risks and flaws as humans, and it’s naive to think that our social ills will disappear in the technology we create.

So what does that mean? Let’s look at 3 things to consider when designing for ethics and inclusivity:

Biases in Conversation Design

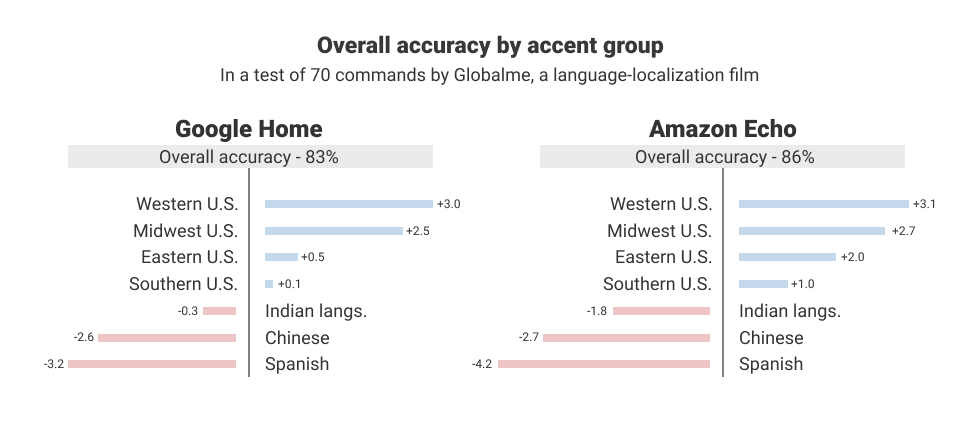

One of our biggest challenges as conversation designers is being mindful of bias. It often goes unnoticed, especially when there’s a short deadline to meet, but Conversational AI is vulnerable to causing social harm through bias. One example is the accent bias that exists in smart speakers. The Washington Post teamed up with two research groups to test thousands of voice commands dictated by more than 100 participants across 20 cities in the United States.

The results showed that Southern U.S. accents were 3% less likely to get accurate responses from a Google Home speaker and Midwest accents were 3% less likely to be recognized by Alexa. More troubling, users with non-native English accents experienced 30% more inaccuracies, facing the biggest representational harm. This is a problem because these speakers were designed to be useful in people’s lives, yet they create a negative impact when users interact with them and are misunderstood.

So why did this happen? The data set of training phrases for these smart speakers was composed predominantly of white, non-immigrant, non-regional English dialects, so accents that were less common or less prestigious were less likely to be recognized.
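One way to catch this kind of gap before launch is to break recognition accuracy down by user group instead of looking at a single overall number. The snippet below is a minimal sketch of that idea, not the method used in the study. The function names, group labels, and sample data are all hypothetical and made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """Return {group: fraction of correctly recognized commands}.

    `results` is an iterable of (group, was_correct) pairs, where
    `group` is a label you assign during testing (hypothetical here).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, was_correct in results:
        totals[group] += 1
        if was_correct:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


def gaps_from_best(accuracies):
    """Return how far each group falls below the best-served group."""
    best = max(accuracies.values())
    return {group: best - acc for group, acc in accuracies.items()}


# Made-up sample data for illustration only (not the study's results).
sample_results = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", True),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]

accuracies = accuracy_by_group(sample_results)
gaps = gaps_from_best(accuracies)

for group in sorted(accuracies):
    print(f"{group}: accuracy={accuracies[group]:.0%}, gap={gaps[group]:.0%}")
```

A large gap for any one group is a signal to revisit the training phrases and collect more examples from the users being misunderstood. How large a gap is acceptable is a judgment call for your team.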

We as designers need to recognize our biases and make sure we’re considering ethical principles in our conversation design to help minimize social harm.

- Collect as much data as you can about your target audience, along with evidence for their needs, and review it with your team.

- While designing your bot persona, don’t assume everyone will like it. Does it represent a harmful stereotype? Is there verbiage that is offensive to specific groups? Does it have a personality trait that could be insulting in other cultures? Make sure to get feedback from a diverse group of users and see how they react to it.

- Use simple language when writing prompts instead of your own language patterns. What you understand may not be clear to others.

- Don’t make assumptions about what users’ utterances mean. Certain utterances like “I need to check a reservation” may not mean the same thing to everyone.

Edge Cases in Conversation Design: Predicting the Unpredictable

Edge cases are scenarios that deviate from the happy path. They are often mistakenly considered rare and unlikely to happen, and are written off as a result. However, dismissing these edge cases could also mean dismissing how marginalized groups interact with the solution, putting one group of users’ needs over others’.

To avoid this thinking, we can ask ourselves if we’re making assumptions:

- How are we making this decision? Based on the timeline? Resources? Business needs? User evidence?

- What end-users are we ignoring?

- Do we have data to back up each edge case? If not, we’re making an assumption. If yes, then why is this an edge case?

- Who did we collect the data from, and where?

Feedback and the Conversation Design Process

We want to make sure that our designs work for everyone, and one of the best ways to ensure that is to collect diverse feedback. You can enlist users to help test your chatbot design or product through remote testing or through recruiting companies, which can source very specific candidates based on demographics. Diverse user testing will help you understand users’ needs and desires and allow you to design conversations for all. Diversity here includes physical abilities, region, fluency, educational background, income, religious and cultural background, age, gender, sexual orientation, and more.

Some things to note while collecting feedback:

- Did anyone struggle to understand the goal of the conversational interaction? Was there hesitation or confusion from the user? Did anyone expect a different outcome?

- Were users able to understand the prompts and language clearly?

- How did users react to the bot’s persona?

- Were the interactions difficult for any specific group of users?

- Was there an important need that a user had that the bot couldn’t handle?

The Uncanny Valley of Conversational AI

This article has been mostly focused on designing for all users and ensuring we consider ethical principles in our conversation design. This also includes being as transparent as possible with users.

Users are not always aware of the type of data they’re giving or how that data is processed and stored, which creates privacy concerns. To protect individual privacy, make sure users consent to what data they give and how that data is used.

We also want to avoid stepping into the Uncanny Valley. It’s crucial to let users know they’re conversing with a bot. When a chatbot or Conversational AI seems too human, it can trigger a negative response and make users uncomfortable. It can also set the wrong expectations. Using phrases like “I’m designed to” or calling your bot a “virtual assistant” can help set expectations early on. Disclosure is key. California even has a law requiring you to make it clear that your bot is not a human.
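As a rough illustration of disclosing early, the sketch below adds a disclosure to the bot’s first message only. The wording, the `with_disclosure` helper, and the turn-index convention are all assumptions made for this example. It is not a statement of what any law requires.

```python
# Hypothetical disclosure wording; adapt it to your bot's persona.
DISCLOSURE = "I'm a virtual assistant, not a human."


def with_disclosure(turn_index, message, disclosure=DISCLOSURE):
    """Prepend the disclosure to the bot's first message (turn 0) only.

    Later turns are returned unchanged so the bot does not repeat itself.
    """
    if turn_index == 0 and disclosure not in message:
        return f"{disclosure} {message}"
    return message


first_turn = with_disclosure(0, "I'm designed to help you check a reservation.")
later_turn = with_disclosure(1, "What name is the reservation under?")
print(first_turn)
print(later_turn)
```

Disclosing once up front keeps the conversation natural. Whether to repeat the disclosure later, for example after a long pause or a handoff, is a design decision to validate with user testing.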

Remember Humans Aren’t Perfect

And neither is the technology we design. Still, it’s important that we try our best to catch these biases by recognizing them in the early stages of design, questioning our edge cases, and testing with diverse users. If your bot is launched into production without these considerations, there may be ethical consequences.