Can you guess the most discussed topic in business today? It’s undoubtedly artificial intelligence (AI), with a particular focus on Generative AI and Large Language Models (LLMs). These technologies are not merely poised to revolutionize multiple industries; they are actively reshaping the business landscape as you read this article.

It’s no surprise that businesses are rapidly increasing their investments in AI. Leaders aim to enhance their products and services, make more informed decisions, and secure a competitive edge. A Gartner poll revealed that 55% of organizations have boosted their investment in Generative AI across various business functions. According to IDC, spending on Generative AI solutions is projected to reach $143 billion by 2027, with a CAGR of 73.3% from 2023 to 2027.

Businesses worldwide consider ChatGPT integration or adoption of other LLMs to increase ROI, boost revenue, enhance customer experience, and achieve greater operational efficiency. This is where a critical question arises: What does effective adoption require? An essential component is the use of LLM orchestration. Tools like LlamaIndex and LangChain are revolutionizing the development of LLM-powered applications.

Let’s explore the architecture of orchestration frameworks and their business benefits so you can choose the right one for your specific needs.

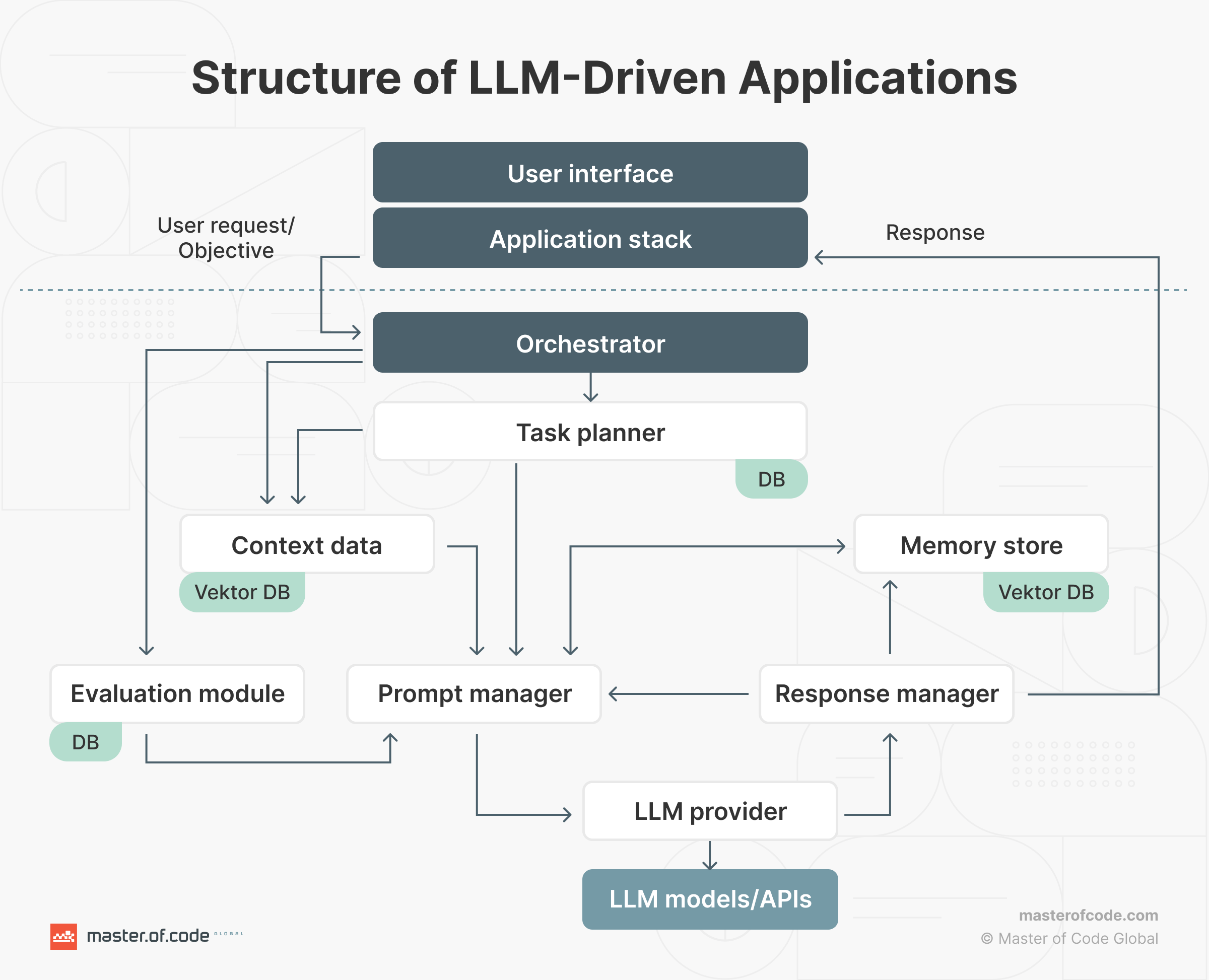

Anatomy of LLM-Driven Applications

The landscape of LLMs is rapidly evolving, with various components forming the backbone of AI applications. Understanding the structure of these apps is crucial for unlocking their full potential.

LLM Model

At the core of AI’s transformative power lies the Large Language Model. This model is a sophisticated engine designed to understand and generate human language after training on extensive data. From this data, it learns to predict and generate text sequences. Models differ along two axes. Open-source LLMs allow broad customization and integration, appealing to teams with robust development resources, while closed-source models offer ease of use and reliable support, typically at a higher cost. Likewise, general-purpose models cover a wide array of tasks, while domain-specific LLMs are tailored to fields such as healthcare or legal services.

Prompts

Prompt engineering is the strategic interaction that shapes LLM outputs. It involves crafting inputs to direct the model’s response within desired parameters. This practice maximizes the relevance of the LLM’s outputs and mitigates the risks of LLM hallucination – where the model generates plausible but incorrect or nonsensical information.

Effective prompt engineering uses various techniques, including:

- Zero-shot prompts. The model generates responses to new prompts based on general training without specific examples.

- Few-shot prompts. The model infers a pattern from a few examples included in the prompt and applies it to similar inputs.

- Chain-of-thought prompts. The prompt includes a few worked examples with their intermediate reasoning, which helps the model follow a similar thought process when generating its own response.

Carefully crafted prompts ensure accurate, helpful content with minimal missteps.
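To make the three techniques above concrete, here is a minimal sketch of how each prompt might be assembled as plain text. The function names and the `Input:` / `Reasoning:` / `Output:` layout are illustrative assumptions, not a format required by any particular model.

```python
from typing import List, Tuple


def zero_shot(task: str, text: str) -> str:
    """Zero-shot: the instruction alone, with no examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task: str, examples: List[Tuple[str, str]], text: str) -> str:
    """Few-shot: a handful of input/output pairs precede the new input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def chain_of_thought(
    task: str, examples: List[Tuple[str, str, str]], text: str
) -> str:
    """Chain-of-thought: each example shows its reasoning before the answer."""
    shots = "\n\n".join(
        f"Input: {x}\nReasoning: {r}\nOutput: {y}" for x, r, y in examples
    )
    # Ending on "Reasoning:" nudges the model to reason before answering.
    return f"{task}\n\n{shots}\n\nInput: {text}\nReasoning:"
```

In practice, the examples would come from real, reviewed interactions in your domain, and the resulting string would be sent to whichever model you use.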

Vector Database

Vector databases supplement the LLM’s knowledge. Source data is split into chunks, each chunk is embedded into a numeric vector, and the vectors are indexed for fast lookup. When a query arrives, it is embedded the same way, and a similarity search within the vector database retrieves the most relevant chunks. This step is crucial for providing the context the LLM needs for coherent responses. It also helps combat LLM risks, reducing the chance of outdated or contextually inappropriate outputs.
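The retrieval step can be sketched in a few lines. This is a toy illustration under loud assumptions: `embed` uses simple word counts instead of a real embedding model, and `TinyVectorStore` is a hypothetical in-memory class, not the API of any actual vector database.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return dict(Counter(re.findall(r"[a-z0-9]+", text.lower())))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """Hypothetical in-memory store: keeps (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Dict[str, float]]] = []

    def add(self, chunk: str) -> None:
        self.items.append((chunk, embed(chunk)))

    def search(self, query: str, k: int = 1) -> List[str]:
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda it: cosine(q, it[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]
```

The retrieved chunks would then be placed into the prompt as context before the model is called.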

Agents and Tools

Agents and tools significantly enhance the power of an LLM. They expand the LLM’s capabilities beyond text generation. Agents, for instance, can execute a web search to incorporate the latest data into the model’s responses. They might also run code to solve a technical problem or query databases to enrich the LLM’s content with structured data. Such tools not only expand the practical uses of LLMs but also open up new possibilities for AI-driven solutions in the business realm.
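As a rough sketch of the idea, an agent loop boils down to: the model picks a tool, the application runs it, and the result flows back into the response. Everything below is hypothetical: `fake_llm_plan` stands in for the model’s tool choice with a keyword rule, and the `ORDERS` data and tool names are invented for illustration.

```python
from typing import Callable, Dict

ORDERS = {"A100": "shipped", "B200": "processing"}  # hypothetical sample data


def lookup_order(order_id: str) -> str:
    """Tool: query a (pretend) database for an order status."""
    return ORDERS.get(order_id, "unknown")


def add_numbers(expr: str) -> str:
    """Tool: sum numbers written as '2 + 3.5'."""
    return str(sum(float(part) for part in expr.split("+")))


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "add_numbers": add_numbers,
}


def fake_llm_plan(user_message: str) -> Dict[str, str]:
    """Stand-in for the model's decision; a real agent would ask the LLM."""
    if "order" in user_message.lower():
        return {"tool": "lookup_order", "arg": user_message.split()[-1]}
    return {"tool": "add_numbers", "arg": user_message}


def run_agent(user_message: str) -> str:
    """Pick a tool, run it, and report which tool produced the result."""
    plan = fake_llm_plan(user_message)
    result = TOOLS[plan["tool"]](plan["arg"])
    return f"{plan['tool']} -> {result}"
```

In a production setting, the tool result would typically be passed back to the model so it can phrase the final answer.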

Orchestrator

An orchestrator brings these components into harmony. It creates a coherent framework for application development. This element manages the interaction between LLMs, prompt templates, vector databases, and agents. That coordination ensures each component plays its part at the right moment. The orchestrator is the conductor, enabling the creation of advanced, specialized applications that can transform industries with new use cases.
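A minimal sketch of that coordination might look like the following. The `Orchestrator` class, `keyword_retriever`, and `stub_llm` are assumptions made for illustration; a real orchestrator would plug in an actual retriever and model client.

```python
from dataclasses import dataclass
from typing import Callable, List

DOCS = ["Refunds take 5 business days.", "Support is available 24/7."]  # sample data


@dataclass
class Orchestrator:
    """Wires retrieval, a prompt template, and a model call into one step."""

    retrieve: Callable[[str], List[str]]
    llm: Callable[[str], str]
    template: str = "Context:\n{context}\n\nQuestion: {question}\nAnswer:"

    def answer(self, question: str) -> str:
        context = "\n".join(self.retrieve(question))
        prompt = self.template.format(context=context, question=question)
        return self.llm(prompt)


def keyword_retriever(question: str) -> List[str]:
    """Stand-in retriever: returns documents sharing a word with the question."""
    words = set(question.lower().rstrip("?").split())
    return [d for d in DOCS if words & set(d.lower().rstrip(".").split())]


def stub_llm(prompt: str) -> str:
    """Stand-in model: echoes the first context line instead of calling an API."""
    context_line = prompt.splitlines()[1]
    return f"Based on our records: {context_line}"
```

Because each component is passed in as a plain callable, any one of them can be swapped without touching the others, which is the main design benefit an orchestrator offers.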

Monitoring

Monitoring is essential to ensure that LLM applications run efficiently and effectively. It involves tracking performance metrics, detecting anomalies in inputs or behaviors, and logging interactions for review. Monitoring tools provide insights into the application’s performance. They help to quickly address issues such as unexpected LLM behavior or poor output quality.
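One simple way to picture this is a thin wrapper around the model call that records latency, logs the interaction, and flags suspicious inputs or outputs. The `MonitoredLLM` class, its threshold, and the flag names are hypothetical choices for this sketch.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class MonitoredLLM:
    """Wraps any prompt -> text callable and records one entry per call."""

    llm: Callable[[str], str]
    max_prompt_chars: int = 2000  # hypothetical anomaly threshold
    records: List[Dict[str, Any]] = field(default_factory=list)

    def __call__(self, prompt: str) -> str:
        start = time.perf_counter()
        output = self.llm(prompt)
        latency = time.perf_counter() - start

        flags: List[str] = []
        if len(prompt) > self.max_prompt_chars:
            flags.append("long_prompt")  # unusual input
        if not output.strip():
            flags.append("empty_output")  # poor output quality

        self.records.append(
            {"prompt": prompt, "output": output, "latency_s": latency, "flags": flags}
        )
        return output
```

The collected records could be shipped to whatever logging or dashboard tool the team already uses for review.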

What are LLM Orchestration Frameworks All About?

In the context of LLMs, orchestration frameworks are comprehensive tools that streamline the construction and management of AI-driven applications. They are designed to simplify the complex processes of prompt engineering, API interaction, data retrieval, and state management across conversations with language models.

They work by:

- Managing dialogue flow through prompt chaining;

- Maintaining state across multiple language model interactions;

- Providing pre-built application templates and observability tools for performance monitoring.
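The first two points, prompt chaining and state, can be illustrated with a short sketch. The `Conversation` class, the `chain` helper, and `stub_llm` are hypothetical names; the message format with `role` and `content` keys mirrors a common chat convention but is an assumption here.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps the message history so every call sees the earlier turns."""

    def __init__(self, llm: Callable[[List[Message]], str], system: str) -> None:
        self.llm = llm
        self.history: List[Message] = [{"role": "system", "content": system}]

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def chain(conv: Conversation, steps: List[str], first_input: str) -> str:
    """Prompt chaining: each step's output fills {previous} in the next step."""
    previous = first_input
    for template in steps:
        previous = conv.ask(template.format(previous=previous))
    return previous


def stub_llm(history: List[Message]) -> str:
    """Stand-in model: reports the turn number and echoes the last message."""
    turn = sum(m["role"] == "user" for m in history)
    return f"reply#{turn} to: {history[-1]['content']}"
```

A call such as `chain(conv, ["Summarize: {previous}", "Translate to French: {previous}"], text)` would run two dependent model calls while the conversation object accumulates the full history.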

Business Benefits of LLM Orchestration

- Reduced time to market. Streamlines LLM integration, enabling quicker AI solution development and deployment.

- Minimized costs. Reduces the need for frequent model retraining, saving on computational and financial resources.

- Token economy. Manages token limitations by breaking tasks down, ensuring efficient model use.

- Data connectivity. Provides connectors for diverse data sources, aiding LLM integration into existing ecosystems.

- Lowered technical barrier. Reduces the need for specialized AI expertise, allowing implementation with existing teams.

- Focus on innovation. Enables businesses to concentrate on unique offerings and user experiences while handling technical complexities.

- Operational reliability. Observability tools help maintain LLM performance and output quality in production environments.
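As one example from the list above, the token economy point can be sketched as splitting a long input into pieces that each fit a budget. This is a deliberate simplification: whitespace-separated words stand in for tokens, whereas real tokenizers count differently, and `split_by_budget` is a hypothetical helper.

```python
from typing import List


def split_by_budget(text: str, max_tokens: int) -> List[str]:
    """Greedy split so each piece holds at most max_tokens words.

    Words approximate tokens here; a real system would use the
    tokenizer that matches its model.
    """
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    words = text.split()
    return [
        " ".join(words[i : i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]
```

Each piece can then be processed in its own model call, with the partial results combined afterwards.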

How Does LOFT by Master of Code Work?

LOFT, the Large Language Model-Orchestrator Framework developed by Master of Code Global, is built with a modern queue-based architecture. This design is critical for businesses that require a high-throughput and scalable backend system. LOFT seamlessly integrates into diverse digital platforms, regardless of the HTTP framework used. This aspect makes it an excellent choice for enterprises looking to innovate their customer experiences with AI.
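To show what a queue-based backend means in general terms, here is a small sketch using Python’s standard `queue` and `threading` modules. It is not LOFT’s implementation; `run_workers` and its parameters are hypothetical, and the handler stands in for an LLM call.

```python
import queue
import threading
from typing import Callable, Dict


def run_workers(
    jobs: Dict[str, str], handler: Callable[[str], str], workers: int = 2
) -> Dict[str, str]:
    """Push jobs onto a queue and let a pool of workers process them."""
    q: queue.Queue = queue.Queue()
    results: Dict[str, str] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            item = q.get()
            if item is None:  # sentinel: no more work for this worker
                q.task_done()
                break
            job_id, payload = item
            try:
                out = handler(payload)
            except Exception as exc:  # keep the worker alive on failures
                out = f"error: {exc}"
            with lock:
                results[job_id] = out
            q.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for item in jobs.items():
        q.put(item)
    for _ in threads:
        q.put(None)  # one sentinel per worker
    q.join()
    for t in threads:
        t.join()
    return results
```

The appeal of this pattern is that request intake and processing are decoupled: throughput can be raised by adding workers without changing the code that accepts requests.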

Key Features

LOFT boasts features that are geared towards creating a robust and engaging user experience:

- Seamless omnichannel experiences. LOFT’s framework-agnostic integration maintains consistency and quality in interactions across all digital channels. Customers receive the same level of service regardless of the preferred platform.

- Enhanced personalization. Dynamically generated prompts enable highly personalized interactions for businesses. This increases customer satisfaction and loyalty, making users feel recognized and understood on a unique level.

- Robust scalability. LOFT’s scalable design supports business growth seamlessly. It can handle increased loads as your customer base expands. Performance and user experience quality remain uncompromised.

- Sophisticated event management. Advanced chat event detection and management capabilities ensure reliability. The system identifies and addresses issues like LLM hallucinations, upholding the consistency and integrity of customer interactions.

- Assured privacy and security. Strict privacy and security standards offer businesses peace of mind by safeguarding customer interactions. Confidential information is kept secure, ensuring customer trust and data protection.

- Streamlined chat processing. Extensible input and output middlewares empower businesses to customize chat experiences. They ensure accurate and effective resolutions by considering the conversation context and history.

Core Concepts

LOFT introduces a series of callback functions and middleware that offer flexibility and control throughout the chat interaction lifecycle:

- System message computers. Businesses can customize system messages before sending them to the LLM API. The process ensures communication aligns with the company’s voice and service standards. Additionally, they can integrate data from other services or databases. This enrichment is vital for businesses aiming to offer context-aware responses.

- Prompt computers. These callback functions can adjust the prompts sent to the LLM API for better personalization. This means businesses can ensure that the prompts are customized to each user, leading to more engaging and relevant interactions that can improve customer satisfaction.

- Input middlewares. This series of functions preprocesses user input, letting businesses filter, validate, and interpret customer requests before the LLM processes them. The step helps improve the accuracy of responses and enhances the overall user experience.

- Output middlewares. After the LLM processes a request, these functions can modify the output before it’s recorded in the chat history or sent to the user. This means businesses can refine the LLM’s responses for clarity, appropriateness, and alignment with the company’s policy before the customer sees them.

- Event handlers. This mechanism detects specific events in chat histories and triggers appropriate responses. The feature automates routine inquiries and escalates complex issues to support agents. It streamlines customer service, ensuring timely and relevant assistance for users.

- ErrorHandler. This function handles failures that occur within the chat completion lifecycle. It allows businesses to maintain continuity in customer service by retrying or rerouting requests as needed. It can also alert technical teams about errors, ensuring that problems are addressed swiftly and do not impact the user experience.
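The lifecycle described in the list above can be pictured with a generic sketch. This is not LOFT’s actual API: the `ChatPipeline` class, its field names, and the call order are assumptions chosen to mirror the concepts listed, written in Python purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


def default_error_handler(exc: Exception) -> str:
    """Fallback reply; a real handler might retry or alert a team."""
    return "Sorry, something went wrong."


@dataclass
class ChatPipeline:
    llm: Callable[[str, str], str]  # (system message, prompt) -> reply
    system_computer: Callable[[dict], str]
    prompt_computer: Callable[[str, dict], str]
    input_middlewares: List[Callable[[str], str]] = field(default_factory=list)
    output_middlewares: List[Callable[[str], str]] = field(default_factory=list)
    event_handlers: List[Callable[[List[str]], Optional[str]]] = field(
        default_factory=list
    )
    error_handler: Callable[[Exception], str] = default_error_handler
    history: List[str] = field(default_factory=list)

    def handle(self, user_text: str, ctx: dict) -> str:
        try:
            for mw in self.input_middlewares:  # preprocess user input
                user_text = mw(user_text)
            system = self.system_computer(ctx)  # build the system message
            prompt = self.prompt_computer(user_text, ctx)  # personalize prompt
            reply = self.llm(system, prompt)
            for mw in self.output_middlewares:  # refine before it is stored
                reply = mw(reply)
            self.history += [user_text, reply]
            for handler in self.event_handlers:  # react to events in history
                override = handler(self.history)
                if override:
                    return override
            return reply
        except Exception as exc:
            return self.error_handler(exc)
```

For instance, an event handler that returns an escalation message whenever the latest user turn mentions an agent would replace the model’s reply with a handoff notice, while any exception raised along the way is routed to the error handler instead of reaching the user.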

LLM Orchestration Frameworks Comparison

Orchestration frameworks play a pivotal role in maximizing the utility of LLMs for business applications. They provide the structure and tools necessary for integrating advanced AI capabilities into various processes and systems. We will compare two widely employed frameworks with our own orchestrator, LOFT, to provide a comprehensive understanding of their benefits, features, and business usage.

The two most frequently employed frameworks are:

- LlamaIndex melds business data with LLMs, simplifying diverse data ingestion. It enhances LLM responses using Retrieval-Augmented Generation (RAG), incorporating business insights. Ideal for creating conversational bots, it ensures an understanding of business-specific contexts.

- LangChain provides a toolkit for maximizing language model potential in applications. It promotes context-sensitive and logical interactions. The framework includes resources for seamless data and system integration, along with operation sequencing runtimes and standardized architectures. This simplifies the creation, management, and deployment of sophisticated LLM applications for complex tasks.

Below is an analysis of the discussed frameworks across the key dimensions:

| | LlamaIndex | LangChain | LOFT |
|---|---|---|---|
| Focus | Data framework for LLM-based applications to ingest, structure, and access data | Framework for building context-aware and reasoning apps | Orchestration framework for integrating various LLM services |
| Use cases | Q&A, chatbots, agents, structured data extraction | Document question answering, chatbots, analyzing structured data | Chatbots, virtual assistants, customer service applications |
| Major advantages | | | |
| Scalability | Handle large amounts of data and concurrent requests while maintaining low latency and high throughput | Efficiently process critical requests even during peak periods | Well-suited for handling large volumes of requests and increasing workloads |
| Flexibility | Extensible architecture and data handling capabilities | Modular design and support for custom components | Flexible event handling, prompt computing, and middleware extensibility |

When selecting from these frameworks, businesses should consider their specific needs. Considerations include the type of data, reasoning complexity, and unified management of LLM services. As Master of Code, we assist our clients in selecting the appropriate LLM for complex business challenges and translate these requests into tangible use cases, showcasing practical applications.

Wrapping Up

The proper AI orchestration framework, like LOFT, can streamline the adoption of LLMs. An orchestrator also boosts their efficiency and output quality, which is crucial for chatbots and digital assistants. As the digital landscape evolves, so must our tools and strategies to maintain a competitive edge. Master of Code Global leads the way in this evolution, developing AI solutions that fuel growth and improve customer experience.

LOFT’s orchestration capabilities are designed to be robust yet flexible. Its architecture ensures that the implementation of diverse LLMs is both seamless and scalable. It’s not just about the technology itself but how it’s applied that sets a business apart. Master of Code employs LOFT to create personalized AI-driven experiences. We focus on seamless integration, efficient middleware solutions, and sophisticated event handling to meet enterprise demands.

As we look towards the future, the potential for AI to redefine industry standards is immense. Master of Code is committed to translating this potential into tangible results for your business. If you’re ready to get the most out of AI with a partner that has proven expertise and a dedication to excellence, reach out to us. Together, we will forge customer connections that stand the test of time.

Find out how LOFT can elevate your ROI and increase revenue with effective LLM integration.